The Cosmological Constant is a Fudge, Hubble Redshift is just Curvature; also, the Vacuum Catastrophe

© Miles Mathis

It will be shown that the cosmological constant is just a fudge factor used to fill an "instability" in the field equations. This instability is the same one explained in the paper Solution to the ellipse problem of Kepler's orbit.

The current cosmological model is called Lambda CDM, named for the cosmological constant and "Cold Dark Matter." Lambda [Λ] is Einstein's own cosmological constant. Einstein wasn't convinced of it, but current theory has found it necessary to give Lambda a small positive value, as Einstein first hypothesized. What Lambda tells us, as its main theoretical addition to General Relativity, is that space is expanding. That looks very much to me like an expansion theory. Now, what does the expansion apply to, precisely? It applies to the fabric of space. What is space? According to current theory the answer is either "nothing" or "I don't know." So current theory assigns expansion either to nothing or to "I don't know." Why does it do this? Does it at least have a good reason? It has the same reason that Einstein had: to make the math work out. You see, in Lambda CDM it is the math that is primary. This is the way physics now operates. First you create a mathematics to express a lot of disparate data, and then you try to come up with a theory afterwards to slide under the math. It is sort of like building the walls and ceiling of a house first and then trying to slide the foundation in at the end. Either with houses or with physics, it can be a headache.

Einstein needed Lambda because he wanted the universe to be in a state of equilibrium. Then it was discovered by Hubble that the universe appeared to be expanding. So Einstein jettisoned Lambda with a red face. However, a thousand discoveries and mathematical manipulations later, the latest physicists have decided they need Lambda, and what is more, they need a tiny Lambda like Einstein first gave them. They say, "Lovely and double lovely, since we can now save our math and keep Einstein to browbeat our enemies with at the same time."

One thing that Einstein didn't see and that no one appears to have noticed since is that Hubble's expansion and Einstein's expansion are two totally different concepts. The expansion of the cosmological constant is given to space itself. The expansion of Hubble is an expansion of the universe. The universe can easily expand without space expanding. If material objects move apart generally, then the universe is expanding, since the universe is defined as the sum of the material objects. But space expanding is a much different, and much more revolutionary, proposal. If space is expanding, then objects can move apart without moving locally. They can have no local velocity and the universe will expand simply because all objects are connected to space.*

So the discovery of Hubble need not have concerned Einstein at all. True, it contradicted the end product of his math. But it did not necessarily contradict the cosmological constant. Space expanding and matter moving away from matter are two entirely different concepts.

Since there was no evidence for space itself expanding, the logical thing to do once Einstein accepted the findings of Hubble would have been to ditch both the cosmological constant and all the math that led up to it. The reason Einstein proposed the constant in the first place is that the body of his math showed that the universe should be shrinking. If he accepted the findings of Hubble, then he could not accept the findings of his math. Instead he just got rid of the constant and kept the rest.

Since then theorists have tinkered with the math and the axioms until they achieved, at long last, an implied expansion to match Hubble. But there is still disagreement between quantum theorists and Relativity specialists on exactly which tensors and fields are correct. The basic answer, as of this time in history, is that no one knows. It is a huge mess. Lambda CDM is the current model, but no one would be surprised if it were overthrown tomorrow by a modified Riemann field of some sort. There are almost as many models as there are theoretical physicists.

So now we have both an expanding space and an expanding universe. As far as space expanding goes, no one seems to have a problem with giving a motion to nothing, since the theory came with a lot of impressive math. It takes years to learn all the math, and this must mean that it is right. Anyone who takes the time to learn such a math is not going to be stopped by any little logical contradiction like assigning motion to the void. Assigning expansion to matter is seen as ludicrous, especially when it doesn't come decorated with any new maths. But assigning motion to the void is fine. You could get the physics world to accept that God made the universe out of used golf balls and chocolate pudding if you could prove it with Riemannian tensors and other big-named variables and fields.

All this goes to say that currently accepted theory contains an expansion theory. In fact, it contains two expansion theories: 1) the universe is expanding, 2) space is expanding. These two expansions are not equivalent. They are two separate concepts.

It is also interesting to note that the expansion of space creates a pressure on matter. Lambda CDM gives the void not only motion but pressure. To exert this pressure, the theory invents any number of scenarios, all of which give material characteristics to space. Of course this raises several questions. One: if the void is made up of particles or strings or any other "things", then it is no longer the void, right? If so, we are not assigning expansion to the void; we are assigning it to as yet unknown forms of matter. Two: to allow for motion we must still have some void left among all these new strings and ghost particles. Otherwise we run into the paradox of Parmenides, where we have a block universe frozen in place. Since the new theories go beyond Einstein in proposing new "things" in the void, do these theories propose that expansion be given to the new things or to the void that is left? If to the void, then we are in an infinite regress: assigning characteristics to the void makes it material, and we must propose even more evanescent particles or strings. If the expansion is given to the new things, then we have a double standard. Outsiders assigning expansion to matter are kooks; insiders assigning expansion to matter are brilliant theorists.

Another question arises: if we are going to assign expansion to matter (as it appears current theory must admit it does), then wouldn't it be far simpler and more elegant to assign it to matter that we already know exists? We don't need to make up new matter to assign expansion to. We already have matter that we can assign expansion to. All we have to do is assign it in a consistent way.

This is made clear by the whole idea of space pressure. Instead of proposing that space causes a pressure in on matter, wouldn't it be more tidy to propose that matter causes a pressure out on space? It could do this simply by expanding. Then you don't need expanding space and new undetectable matter; you only need the matter we have and an expansion that we can already assign to gravity. One stone begins to kill so many birds it is embarrassing. Pressure in and pressure out are empirically equivalent. Why not choose the simpler theory?

To give another example of how few switches you have to throw to turn the absurdities of current theory into the beauties of my theory, consider that it is not only Einstein and General Relativity that parallel expansion theory, it is also QED. Paul Dirac famously proposed that the Gravitational Constant [G] (not to be confused with the cosmological constant) was changing over time. This would mean that matter and space were changing relative to each other. Dirac was not afraid of making proposals of this sort, and neither was Richard Feynman, another quantum physicist who toyed with the ideas of expansion. Both men recognized that the problems of gravity implied strange relationships, and when gravity met QED, these problems were intensified. Neither man proposed firm theories of expansion of any kind, since the math they had come to accept could not be resolved within a new theory of this sort. Both were tied very strongly to a set of equations, equations they had helped to create and fine-tune. Besides, despite being considered two of the towering geniuses of the 20th century, neither was mainly a theoretical physicist. They were mathematical physicists, much better with equations than with concepts.

In the 20th century, expansion was never a joke or an idea looked at only by crackpots and cranks. In various forms it was a viable alternative, an alternative that has now been accepted as Lambda, the cosmological constant. Current theory assigns expansion to space, which is not void but is made of things. Therefore current theory assigns expansion to matter. The main difference between my theory and current theory is that current theory assigns expansion to mythical animals like unicorn-strings and dragontail-loops, whereas my theory assigns expansion to protons and trees and stars.

MM's theory gives no characteristics to space. Space is quite literally space, so that filling it would be a logical contradiction. It doesn't move, expand, exert a force, supply a pressure, or make toast. It is an empty static grid that houses real, linear, deterministic little spheres, and that is all it is.

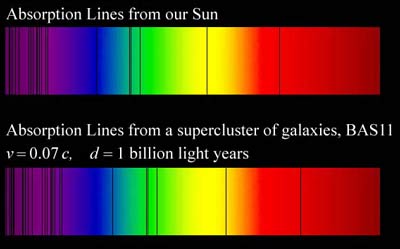

Hubble redshifts have traditionally shown that the universe is expanding. However, in the past decade or two, we have had another broad finding that not only do the redshifts increase with distance, they also appear to be increasing with time. This is now generally interpreted to mean the expansion is increasing, and to mean that the universe is open. We have been told that no other theory can explain these redshifts, but MM presents another possibility which does not fully contradict the Big Bang or expansion (yet).

Let us consider two broad facts, facts that have never been studied closely in this context, or studied as a pair. One, the Earth is on an outer arm of a large spiral galaxy. This means that the Earth and everything in its vicinity is moving in a large arc through the universe. Now, the light that is reaching the Earth from beyond the galaxy may also be moving in a large arc, due to the curvature of the entire universe, but the curvature of this arc must be much smaller than the arc of the Earth. The universe cannot be curving at the same rate as a single galaxy. Some will answer that light IS bent by the galaxy, since light is bent by all mass, but, again, the light cannot be bent as much as the Earth's motion is bent, since the light is moving so much faster (1,400 times faster). The Earth's bend could be seen just by looking at the galaxy from a distance, while the light's bend would not be obvious at all from the same vantage. No matter how you look at it, the Earth is moving in a tighter curve than the light reaching it from all sides.
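The "1,400 times faster" figure is simple arithmetic. A minimal Python check, assuming the 220 km/s galactic orbital speed that the text quotes later and the SI value of c, gives a ratio of about 1,363, which the text rounds to 1,400:

```python
# Compare the speed of light with the solar system's galactic orbital speed.
C_KM_S = 299_792.458     # speed of light in km/s (exact SI value)
V_GALACTIC_KM_S = 220.0  # galactic orbital speed quoted in the text, in km/s

ratio = C_KM_S / V_GALACTIC_KM_S
print(f"c / v = {ratio:,.0f}")  # c / v = 1,363
```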

Two, this great arc of the solar system as it revolves around the galactic center is not circular, but elliptical. This means that the curvature changes slowly over time. The solar apex, which measures the current direction of the Sun and its system relative to the galactic center, is currently at 60 degrees. If the current orbit were perfectly circular, then that angle would be 90 degrees. From this we can conclude that the Sun and its system are currently experiencing a greater arc than normal or average, and that therefore we could say the curvature is increasing. In other words, the Sun must be approaching one of the “ends” of its ellipse, the galactic equivalent of either perigee or apogee.

Now, these two facts taken separately don't point to much. But together they may suggest another cause of Hubble redshifts.

The first thing to notice in this regard is that the relationship of the curve of the Earth to the lesser curve of light is absolute: it doesn't change with regard to direction. The galactic motion isn't curving one way to light coming from one direction and curving the opposite way to light coming from another direction. The difference in curvature is an absolute. For example, draw two circles, one bigger than the other. The bigger one will have less curvature at all points, and this curve relationship is blind to direction. A curvature difference is an absolute. If this isn't clear, look at the cause of the Earth's curve, instead of the curve. The curve is caused by gravity. Well, light coming from all directions experiences the gravity of the Milky Way in the same way. Gravity doesn't curve in for light coming from the east and curve out for light coming from the west. It always curves in.

The second thing to notice is that this effect is magnified. The Earth isn't just curving with regard to the galactic center, it is also curving with regard to the Sun. The Earth is in two gravitational curves simultaneously, and its curvature is much greater than that of the distant light it receives. This may be important, since we can say the same thing about all of our telescopes to date. Even the ones that have left the Earth's surface have still been orbiting the Sun with us.

The third thing to notice is that we may have a second magnifying effect. The Earth is also rotating on its axis, so we have a tight curve about the center of the Earth. That makes a third curve that the light itself is not experiencing. The Hubble Space Telescope would also be expected to experience this second magnifying effect, in fact even more strongly, since it orbits the Earth once every 95 minutes.

One just has to prove that this difference in curvature would cause a redshift. This isn't hard to do, since gravitational fields are already known to cause redshifts, and this is admitted by the standard model. Curvature is the sign of gravity, so all straighter lines must be “less gravitational”, in at least one sense. A critic will say, “If the Earth is in two or three curved fields, by your definition, then isn't it downhill in two or three ways? And, if so, shouldn't it actually be blue-shifted two or three times? Gravitational redshifts require that the observer be uphill, as with the Earth observing a star like Sirius. This also applies to your crazy expansion theory, since if the Earth is expanding, it should be moving toward all incoming light. That is also a blue-shift!” That would be the knee-jerk analysis here, but it can be answered.

The answer is that gravitational shifts like the ones the critic is talking about are very small. That is why they have been difficult to confirm. If they were as large as the Hubble shifts we are trying to explain here, they would have been easy to confirm. We would not have to study Sirius or fudge experiments such as Pound-Rebka (discussed on MM's site); we could get confirmation straight from the Sun. The Sun has a huge gravity field, and we should have found easy confirmation there. This is all to say that MM is not proposing that sort of small uphill/downhill effect. It would not help him here, for two reasons: 1) it is too small, 2) it is blue instead of red. MM does not need to address Relativity, since gravity was already a curve before Einstein, and the main curve is all that is needed.

MM has shown that gravity is an acceleration, and any acceleration can be written or expressed as a curve. Just consider a Cartesian graph, where all accelerations are curves. A Cartesian graph is not relativistic. So we could have expressed Newton's gravity as a curved field if we had wanted to. Einstein's field is actually two curves stacked, but Newton's field was already the main curve, and that is what MM is unwinding here, in a novel way.

Clear your mind of redshifts, Doppler shifts, gravitational redshifts, and Relativity; then all you have to accept is that we have real curves here, caused by orbiting. It turns out that any curve will measure any straighter line to be redshifted, supposing that line is a “line” of light or E/M radiation. The reason is rather simple: it comes from the operation of measurement, and all we have to do is look at how we see distant objects using light. The important fact is that we do not measure at an instant. When we collect light from a distant object in a telescope, we take some sort of extended reading. It will usually not be too extended, since we want to avoid blurring, but we don't just collect one dt of light and do our calculations from that. No, we have an exposure time of some seconds or minutes, and that gives us our “observed wavelength.” Now, if we have our telescope on a planet that is rotating once every day, or on a low-orbit satellite that is orbiting once every 95 minutes, and that planet or satellite is orbiting a star which is orbiting a galactic center, that minute of light collection will be a curved minute. To the light, it will be non-negligibly curved, even over a short period of time. Remember, the light is moving extremely fast, so one minute allows for a lot of change in light. Light travels about 18 million kilometers in one minute, so one minute is never negligible to light. The light we take in at the beginning of our minute is not strictly equivalent to the light we take in at the end of our minute, though our telescope is turning very slowly to accommodate the source. The curvature is registered in our collection, and it is registered as a redshift, because the curve always creates some sideways motion relative to the light during its collection.
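The 18-million-kilometer figure can be checked the same way. This sketch assumes a one-minute exposure, as in the text, and simply multiplies it by c:

```python
# Distance light covers during a one-minute exposure.
C_KM_S = 299_792.458  # speed of light in km/s (exact SI value)
EXPOSURE_S = 60.0     # exposure time in seconds (one minute, as in the text)

light_distance_km = C_KM_S * EXPOSURE_S
print(f"{light_distance_km:,.0f} km")  # 17,987,547 km
```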

Look at it this way. If I am moving in a curve relative to you, that just means that you can't measure me with only one dimension. You can't measure me using only x, you need x and y. Over any interval, I will be moving some amount sideways to you. If we reverse the measurement, this is also true. If I am moving in a curve relative to you, then you will be moving in a curve relative to me. I will define myself as motionless, or at least as uncurved, and I will apply the curvature to you. Therefore, I will have to measure you using both x and y. I cannot measure your motion with one dimension only. But of course this gives your motion a hypotenuse over every interval, and I have to triangulate to measure you.

Now, if we apply this pretty simple logic to a collection of light, it implies a blue shift only if I measure the same photon twice. For instance, if we could set up two detectors, one right behind the other, and the first detector could somehow make a detection without stopping the photon, then the two detectors would show a blue shift of that one photon as it traveled down. That would be the gravitational blue shift. But that is not what is happening with a real telescope, is it? It doesn't measure a shift on one photon, or even the shift of a set of photons, since it doesn't measure the same photons twice. No, it measures the first photons against the last photons. Since there has been a curve between the first photons and the last photons, there has been a sideways motion in that time, and it will look like the photons had to travel that hypotenuse. They will be stretched out by that curvature during the time of collection, and that stretching will be read as a redshift.

One might think that we do not have nearly enough motion or curvature to show the kind of redshifts that we see, even over an hour or a hundred hours, and that these hypotenuses are tiny enough to ignore. Not true. The solar system is moving 220 km/s in its orbit around the galactic center, the Earth is moving another 30 km/s in orbit around the Sun, and the Earth's surface is moving another 0.5 km/s around its own center. Those are some pretty spectacular speeds, especially the first one, which is about c/1,400. Using just that speed, we can create an extra distance of 13,200 km in one minute. That is not the kind of curvature you can ignore.
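The sideways distances per minute follow directly from the three quoted speeds. This sketch treats each speed as entirely sideways to the incoming light over the minute, which is the assumption behind the text's 13,200 km figure; the dictionary labels are illustrative only:

```python
# Sideways distance covered in one minute at each speed quoted in the text.
EXPOSURE_S = 60.0  # one-minute exposure

speeds_km_s = {
    "galactic orbit": 220.0,
    "orbit around the Sun": 30.0,
    "rotation about Earth's axis": 0.5,
}

# Assumes the full speed counts as sideways motion during the exposure.
sideways_km = {label: v * EXPOSURE_S for label, v in speeds_km_s.items()}

for label, d in sideways_km.items():
    print(f"{label}: {d:,.0f} km")
```

Only the galactic figure (13,200 km) appears in the text; the other two are the same multiplication applied to the other quoted speeds.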

This analysis would seem to imply that the longer our exposure time, the greater the redshift we should find. Not true. To find those hypotenuses, we compare the sideways motion due to curvature during the time of collection or exposure to the distance light travels in that same time. If we take a shorter exposure, we also have a shorter distance traveled by light in that time, so when we calculate a percentage change, it doesn't matter how long we actually expose. What matters is the curvature of the observer relative to the curvature of the light. Thus curvature can cause a redshift by creating sideways motion relative to the light, and light from more distant sources would be shifted more if that distant light is curved less. It is, since in general the universe is curved more at smaller scales than at larger scales.

General Relativity shows us that the universe as a whole is curved, but the universe is not as curved as a galaxy, and a galaxy is not as curved as a star. The curvature decreases at larger scales, by definition. If the curvature increased for larger scales, the larger scales could not be larger, could they? They would be pulled back on themselves, and would be smaller. Therefore, light traveling longer distances can be considered to be “larger-scale” light. Its total bend may be quite large, but its bend per unit length is less than that of nearer light, simply because it got here from so far away. If it had the same bend per distance traveled as nearer light, it could not have gotten here from there. More distant light is bent less by a tautology, since to get here from there, it had to travel a straighter path. The universe is curved less at larger scales, and it is the universe that determines the path of the light. Light diverted by smaller scale curvatures could not have gotten here from so far away.
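The claim that exposure time drops out can be illustrated numerically. The sketch below assumes a constant 220 km/s sideways speed (the text's galactic figure) and uses a hypothetical helper, `sideways_fraction`, that divides the observer's sideways distance by the distance light travels in the same exposure; the result is the same for a one-second, one-minute, or one-hour exposure. The text does not say how this fraction converts into a redshift value, so the sketch stops at the ratio:

```python
# Sideways distance divided by light-travel distance over the same exposure.
C_KM_S = 299_792.458     # speed of light in km/s
V_SIDEWAYS_KM_S = 220.0  # assumed constant sideways speed (the text's galactic figure)


def sideways_fraction(exposure_s: float) -> float:
    """Hypothetical helper: ratio of the observer's sideways travel to the light's travel."""
    sideways_km = V_SIDEWAYS_KM_S * exposure_s
    light_km = C_KM_S * exposure_s
    return sideways_km / light_km  # the exposure time cancels, leaving v / c


for t in (1.0, 60.0, 3600.0):
    print(f"exposure {t:>6.0f} s -> fraction {sideways_fraction(t):.3e}")
```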

Since more distant light is curved less, the difference between its own curvature and our curvature will be greater. Therefore our sideways motion relative to it will be greater, and the redshift will be greater.

In a similar way, we can explain the increase in redshift discovered by the Hubble telescope. As shown above, the Sun and its system are currently in a phase of increased curvature, due simply to the elliptical nature of the galactic orbit. This means that this acceleration of the redshift is temporary. As soon as the Sun passes whichever end of the ellipse it is approaching, this acceleration of the cosmic redshift will switch to a deceleration.

Having addressed the main points of his theory, MM will now try to reintegrate the Cosmic Background Radiation (CBR) into what he has found about redshifts. If redshifts are caused by curvature, then we can explain the CBR with curvature instead of expansion. Everything that has been said about other light applies equally to the CBR. We only have to keep our proposal that the CBR is, on average, coming from a great distance. Since MM has proposed that it is the universal background charge field, rather than any local charge field, this is not difficult to do. As the ambient charge field of the universe, its average distance would be very large. In fact, using MM's equations above, we could even take the average out a bit. To match his charge field calculations more closely, we need the number for z to be about 11, which is well beyond the 14 billion year limit.

This appears to be a strange mixture of old theory and new, since values of z have to be read in terms of Doppler redshifts, not curvature redshifts. MM assumes that curvature would have to follow distance in much the same way that expansion did, since he is not overthrowing General Relativity. MM does not believe that more distant objects are moving away from us, but he does believe the light they send us is curving less, and that belief is due to a general agreement with GR. In other words, Doppler changes are caused by distance, and so is MM's new curvature change. Disagreeing with the way the Doppler changes are calculated, MM simply lets his curves change at the same rate as the redshift, and in this way he obtains a first estimate of the distance.

Celestial mechanics lacks a balancing mechanism. Specifically, orbits show a degree of float that is completely unexplained by current theory, whether Kepler's, Newton's, or Einstein's. In opening up Newton's gravitational equation, MM has found the E/M field contained within, signaled by G. (See "Newton's law is a Unified Field of Gravity and E/M" and "G is the Key to the Secret of Gravity.") This has allowed him to show very simply that the gravity field is and always has been two fields masquerading as one. Of course, this means that Einstein's field equations are also unified field equations, expressing both E/M and gravity at the same time. This makes every orbit a balancing of three motions, not two, which allows for stability and correction. It also explains resonances, torques, and many other things, in strictly mechanical ways. MM has recently discovered that this same finding solves the problem of the cosmological constant, since the instability of the orbit and the instability of Einstein's field equations are caused by the same thing, and solved by the same thing. The reason Einstein's solutions were unstable is that he was unaware of the E/M field inside his equations. He was unaware that Newton's field, which he was extending and correcting, was already two fields. So when physicists, and Einstein himself, discovered that his equations were unstable, what they were really discovering is that the orbit is unstable. They were uncovering a problem that had been hidden for centuries.

This instability is explained in the paper "Solution to the Ellipse problem." Kepler's and Newton's orbits are unstable, and have been from the beginning. But everyone had forgotten that, if they had ever realized it in the first place. Newton's own field equations were not stable, because any bad differential would doom them. Orbits could be summed to show a circuit, but the differentials themselves could never be explained. Any dt of imbalance should doom an orbit, according to the historical and present equations, but orbits correct themselves somehow. Underneath the differential equations, the “innate velocity” of the orbiter makes corrections upon itself, though this is physically impossible in gravity-only theory.

Now, when Einstein added time differentials to Newton, and used tensors to express curvature, all this was buried a second time. Everyone thought that Einstein's equations had developed a new instability, when all they were doing was expressing an old instability in a new way. Einstein tried to correct this old instability by creating a constant that would force a new stability on the equations by fiat, but it turned out that data was even then coming in that showed instability. Hubble's data appeared to show an expansion, so the constant was changed to match this expansion. It has been changed several times since then to match newer values of expansion.

Once we realize that Einstein's field equations contain the E/M field, we can show how the stability is created by the mechanics of the two fields. In the paper "Solution to the Ellipse problem" above, MM showed that the orbit is really stable for a reason, since the E/M field creates that stability by responding to changes in the gravity field or to changes in position. And when we open up Einstein's field equations and see the E/M field there, we get the same stability. Newton's and Kepler's equations only seemed to be unstable, because they had been mechanically undefined, or under-defined. In the same way, Einstein's field equations only seem to be unstable, because they have not been recognized as unified field equations. Yes, Einstein never realized that his equations were already unified. The solution was hiding in plain sight.

This means that the cosmological constant is just a fudge, no matter what its value is. Einstein's equations don't require any constant. They require a subtle reworking and re-defining, so that we see that some of the rates of change of some of the tensors are defined by the E/M field, working in tandem with the gravity field. Once we see this, and express this correctly, the equations will be self-correcting. It is the charge field that allows for this correction and unification of both Newton's and Einstein's equations.

The Big Bang and expansion have been closely tied to the field equations and the value of the cosmological constant from the beginning. Having thrown out expansion and the current explanation of the CBR, it should be clear that the cosmological constant is a ghost. There is no constant and no field instability, since the field equations, like the orbit, can be fully stabilized in a natural way, by the charge field. We don't fill Einstein's hole with a constant, we fill it with the charge field.

First posted November 14, 2011

The vacuum catastrophe or cosmological constant problem is still called the worst prediction in history. Particle physicists predicted a value for the cosmological constant that is about 120 orders of magnitude larger than the current measurement, and no one knows how to solve this problem. Wikipedia includes it in several of its sidebars as an “unsolved problem in physics.” MM will solve it for you very quickly and simply.

Once again, we start with MM's discovery that Newton's equation is a unified field equation. (See "Newton's law is a Unified Field of Gravity and E/M.") The old equation $F = GMm/R^2$ is a unified field equation. That is to say, the equation includes the charge field or the foundational E/M field, and G is the scaling constant between the two fields. The fields are in vector opposition, and F gives us the result of the two fields.

Likewise, Coulomb's equation $F = kqq/R^2$ is also a unified field equation, with k as the scaling constant. Coulomb's equation includes gravity, and F is the result of the two fields. Since quantum mechanics, like Coulomb's equation, is based on charge, and since what is called charge in quantum mechanics includes gravity, quantum mechanics is also a unified field. It already includes gravity. That is why it has been impossible to unify it with gravity. You can't re-unify something that is already unified.

If we rerun the fundamental equations with this new knowledge, we find that the charge force on the electron is not $8.2 \times 10^{-8}$ N, it is $8.9 \times 10^{-30}$ N, a difference of about $10^{22}$.
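For reference, the conventional figure in the text matches the textbook Coulomb force between a proton and an electron at the Bohr radius. The sketch below assumes that is the configuration meant, computes it from CODATA constants, and divides it by the text's proposed value to check the quoted difference of about $10^{22}$. It does not derive the $8.9 \times 10^{-30}$ N value, which is taken from the text as given:

```python
# Conventional Coulomb force between a proton and an electron at the Bohr radius.
K_COULOMB = 8.9875517923e9       # Coulomb constant k, N m^2 / C^2
E_CHARGE = 1.602176634e-19       # elementary charge, C (exact SI value)
BOHR_RADIUS = 5.29177210903e-11  # Bohr radius, m

f_coulomb = K_COULOMB * E_CHARGE**2 / BOHR_RADIUS**2  # F = k q q / R^2

F_PROPOSED = 8.9e-30  # N, the value proposed in the text (not derived here)

print(f"conventional force: {f_coulomb:.1e} N")  # conventional force: 8.2e-08 N
print(f"ratio: {f_coulomb / F_PROPOSED:.1e}")    # ratio: 9.3e+21
```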

What caused this huge error was using the constant k to calculate the force. In Coulomb's equation, the constant k is a scaling constant between the quantum level and the macro level (the size of Coulomb's pith balls). It gives us the scaled field charge from the local charge. But when calculating the force on the electron, we are already at the quantum level, so we don't need the scaler. To find the correct answer from Coulomb's equation, you must jettison k and add the charges (instead of multiplying them). (See "Coulomb's equation is a Unified Field equation in disguise" and "Gravity at the Quantum Level.")

If we un-unify the E/M field equations (the quantum equations), pulling them apart into their two constituent fields, we find that the charge force is much smaller than we have been told. The gravitational force between proton and electron makes up the difference, giving us the same data but a vastly different unified field. That is, gravity is $10^{22}$ times stronger at the quantum level than we thought. In "Gravity at the Quantum Level," MM showed this to be the greatest error in quantum mechanics, and it is this same error that is causing the vacuum catastrophe.

But where does current theory get the 120 orders of magnitude failure? From here:

Well, we have seen that gravity is $10^{22}$ times stronger and E/M is $10^{22}$ times weaker, so we already have a $10^{44}$ correction to that calculation. But we still have the strong and weak forces, right? Not really, since MM has shown that the weak force is not a fundamental or field force. It is simply a variation in E/M during a decay, so it doesn't enter this problem. And MM has shown that the strong force is a ghost. It doesn't exist at all. Therefore we already have enough to solve. (See "The Weak force is not a Force but a set of Collisions" and "The Nucleus is kept together by Gravity; there is no Strong Force.")

To solve, we remember that gravity is supposed to be $10^{38}$ times weaker than E/M at the quantum level. But if we change that by $10^{44}$, then gravity is now stronger by $10^{6}$. If we again seek “the energy at which the gravitational interaction becomes as strong as E/M,” we find we are far below $10^{19}$ GeV. In fact, we must go below the quantum level itself, since gravity is still stronger than E/M at the quantum level. This means the energy is less than 1 eV (the basic energy of the quantum level), which is already a correction of $10^{28}$. According to MM's theory, we have to go down to the size of the photon to equalize E/M and gravity, because at that level both are zero. There is no charge at the level of the photon, because the photon is what creates charge. What energy are we at there? About $10^{-21}$ J, or $10^{-2}$ eV, which takes our correction to about $10^{30}$. Since the zero-point energy density is developed from the fourth power of the Planck mass, $M_p^4$, our correction is that original correction to the fourth power: $(10^{30})^4 = 10^{120}$.
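Every step in the paragraph above is a power of ten, so the argument can be tracked as a list of exponents. The sketch below is a minimal bookkeeping aid that assumes the figures exactly as MM states them (the two $10^{22}$ shifts, the $10^{-38}$ ratio, the $10^{19}$ GeV cutoff and the $10^{-21}$ J photon energy). It checks the arithmetic only, not the physics; the variable names are illustrative.

```python
import math

# Order-of-magnitude bookkeeping for the argument above, tracked as log10 exponents.
# All inputs are the figures quoted in the text; the sketch checks arithmetic only.
EV_PER_JOULE = 1 / 1.602176634e-19  # 1 eV = 1.602176634e-19 J (exact SI definition)

gravity_boost = 22   # gravity 10^22 stronger at the quantum level
em_cut = 22          # E/M 10^22 weaker
total_shift = gravity_boost + em_cut            # combined correction: 10^44

standard_ratio = -38                            # gravity/E-M in standard theory: 10^-38
new_ratio = standard_ratio + total_shift        # gravity now ahead by 10^6

planck_cutoff_ev = 19 + 9                       # 10^19 GeV = 10^28 eV (correction down to 1 eV)
photon_energy_ev = round(math.log10(1e-21 * EV_PER_JOULE))  # 10^-21 J is ~6e-3 eV -> 10^-2

energy_correction = planck_cutoff_ev - photon_energy_ev     # 10^28 / 10^-2 = 10^30
density_correction = 4 * energy_correction                  # density ~ M_p^4 -> (10^30)^4

for label, exponent in [
    ("combined gravity/E-M shift", total_shift),
    ("new gravity/E-M ratio", new_ratio),
    ("energy correction", energy_correction),
    ("energy-density correction", density_correction),
]:
    print(f"{label}: 10^{exponent}")
```

Working in exponents keeps the sketch exact: the only rounding happens in the joule-to-electronvolt step, where $6 \times 10^{-3}$ eV is taken as $10^{-2}$ eV, as in the text.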

As usual, that is just the quick and rough math, to show you the mechanics. As a theorist, MM is mainly interested in making you see the motions and the mechanics, and less interested in piling up big systems of equations.

In his paper on the Casimir Effect, MM showed that there is no zero-point energy. You can't take this problem below the level of the charge photon, so talking about “zeros” or “points” is pointless. The baseline energy of the unified field isn't found by taking the field equations to a limit or toward zero. It is found at the level of the charge photon, for strictly logical reasons. You can't take the unified field equations below the charge photon, because there is no unified field below the charge photon. The charge photon causes the field, so beneath the charge photon there can be no charge and no field.

This also explains why the Planck mass and energy have never seemed to fit the Planck length and time. At the Planck scale, the time is $10^{-44}$ s and the length is $10^{-35}$ m, but the mass and energy are relatively huge, being $10^{-8}$ kg and $10^{19}$ GeV. We have always wondered how $10^{19}$ GeV could fit $10^{-44}$ s, and now we see that it doesn't. The Planck energy is far too large, and this was caused by the mistaken scaling of gravity and E/M. In addition, it turns out the Planck scale is really just the photon scale, since in Unifying the Photon with other Quanta MM has shown that the charge photon has a radius of about $10^{-24}$ m and a mass of about $10^{-37}$ kg.
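The Planck-scale figures compared above follow from the standard definitions $t_P = \sqrt{\hbar G/c^5}$, $l_P = \sqrt{\hbar G/c^3}$, $m_P = \sqrt{\hbar c/G}$ and $E_P = m_P c^2$. The sketch below computes them from hand-typed CODATA 2018 constants (an assumption of this sketch, not MM's code) so the orders of magnitude being compared can be checked directly; it does not compute MM's charge-photon values.

```python
import math

# CODATA 2018 values (SI), typed in by hand for this sketch.
HBAR = 1.054571817e-34       # J*s, reduced Planck constant
G = 6.67430e-11              # m^3 kg^-1 s^-2, Newtonian gravitational constant
C = 299_792_458.0            # m/s, speed of light
J_PER_GEV = 1.602176634e-10  # J per GeV

planck_time = math.sqrt(HBAR * G / C**5)
planck_length = math.sqrt(HBAR * G / C**3)
planck_mass = math.sqrt(HBAR * C / G)
planck_energy_gev = planck_mass * C**2 / J_PER_GEV


def order_of_magnitude(x: float) -> int:
    """Power of ten of a positive number, e.g. 5.39e-44 -> -44."""
    return math.floor(math.log10(x))


for name, value, unit in [
    ("Planck time", planck_time, "s"),
    ("Planck length", planck_length, "m"),
    ("Planck mass", planck_mass, "kg"),
    ("Planck energy", planck_energy_gev, "GeV"),
]:
    print(f"{name}: {value:.3e} {unit} (order 10^{order_of_magnitude(value)})")
```

Taking the floor of the base-10 logarithm gives the power of ten in scientific notation, which matches how the text quotes these scales.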

If we use these numbers instead of the old Planck-scale ones, we get the right value for the so-called zero-point energy, since zero-point energy is just charge field energy in space. That said, it doesn't seem to have been recognized that this charge field energy can't be measured at its minimum without flying out of the galaxy. The charge field within the galaxy must be measurably higher than outside it, so data from Voyager is going to be pretty useless. Voyager will be picking up charge from the Sun and planets as well as the rest of the galaxy, so any “baseline” measured in the solar system is still going to be quite high.

That solves the vacuum catastrophe, but on its own it doesn't solve the cosmological constant problem. With MM's unified field equations, the cosmological constant problem simply disappears, because a unified field does not require such a constant. It is not the constant that resists gravity, it is the E/M field. In MM's equations, charge and gravity oppose one another at all levels, and this creates the balance in the unified field. There is no cosmological constant and no dark matter. There is only baryonic matter and photonic matter. Of course, the current value of the CC is also driven by the values for Hubble expansion and for accelerating expansion, but we won't get into that here. Regardless, the so-called “space pressure” can no longer be attributed to space. The pressure that balances gravity does not come from space; it comes from photons and charge.

Clearly, the standard model has existed with this huge hole in it for decades, even centuries. The mainstream sells it as a relatively recent problem, but it has existed ever since the force on the electron was first calculated. This means that it has existed since the time of Coulomb. Particle physicists inherited it from Faraday and Maxwell and kept it under wraps for a long time. Even now, it is pretty well hidden. Whenever they tell you that QED is the most successful theory ever, that it matches prediction to fantastic levels, that it is bedrock, that it is a miracle, and so on, they never seem to remember this failure. Given its size and the fact that it has polluted everything around it, it is astonishing that particle physicists have been able to maintain such incredible levels of salesmanship.

We can see this by looking for a moment at Weinberg's long tinkering with the cosmological constant. (See The Weak force is not a Force but a set of Collisions.) In MM's paper on the Susskind/Smolin debate on the Anthropic Principle, we saw Weinberg trying desperately to use the anthropic principle to push the CC prediction into line with the facts. In a nutshell, he proposed that the quantum prediction might not be wrong as a universal average. The reason it is so low here, he said, is that it needs to be low to support life: the region of the universe that we measure must have a low CC, or we wouldn't be here to measure it. All very clever, except that now we can see it was just a push. The CC isn't low because it is supporting life here; it is low because the old math and theory were a hash. The old equations had gravity $10^{22}$ times too small and E/M $10^{22}$ times too large.

With that in mind, we may reconsider all the hogwash we have heard over the past seven or eight decades about phonons and borrowing from the vacuum and symmetry breaking and virtual particles and so on and on. Just as Weinberg was trying to fill holes in desperately bad equations, most other modern physicists have been doing the same thing with other, equally bad equations. We do not need a lot of philosophical gibberish or a lot of “creative” solutions; we need to rewrite the old equations. That is physics the old way.

* Sergio Dutra, Cavity Quantum Electrodynamics, p. 63. Available on Google Books.

Please note that this paper is a simplification by me of a paper or papers written and copyrighted by Miles Mathis on his site. I have replaced "I" and "my" with "MM" to show that he is talking. Links to papers that have not yet been simplified point directly to the Miles Mathis site and will open in another tab. (It will be clear which of these are Miles Mathis originals because they will still contain "I" and "my".) The original papers on his site are the ultimate and correct source. All contributions to his papers and orders for his books should be made on his site.

(This paper incorporates sections of Miles Mathis' third4 paper and hubb paper and the complete catas paper.)

The Cosmological Constant

Hubble Redshifts

The Vacuum Catastrophe

To estimate the gravitational effect of the electromagnetic zero-point energy predicted by theory, we can adopt the Planck energy as a cutoff. This is the energy at which the gravitational interaction becomes as strong as the other three fundamental forces of nature (i.e. the scale at which we expect the current theory to break down). This energy is about $10^{19}$ GeV. This yields a zero-point energy density of about $10^{19}$ GeV/m$^3$. *